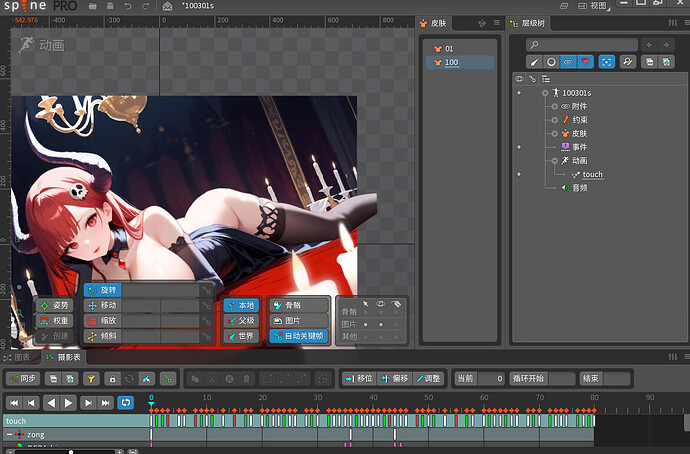

Well... it runs. It downloads the Spine assets under bundle_sp/special and automatically restores their original file names.

import os

import requests

# Standard base64 alphabet, used to map each character back to its 6-bit value.
BASE64_CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"
BASE64_VALUES = [0] * 128
for idx, char in enumerate(BASE64_CHARS):
    BASE64_VALUES[ord(char)] = idx

HEX_CHARS = list('0123456789abcdef')
_t = ['', '', '', '']
# 36 slots: 32 hex digits plus 4 dashes, laid out 8-4-4-4-12.
UUID_TEMPLATE = _t + _t + ['-'] + _t + ['-'] + _t + ['-'] + _t + ['-'] + _t + _t + _t
INDICES = [i for i, x in enumerate(UUID_TEMPLATE) if x != '-']


def decode_uuid(base64_str):
    # Expand a 22-character compressed UUID into the 36-character dashed form.
    # Anything that is not 22 characters long is returned unchanged.
    if len(base64_str) != 22:
        return base64_str
    result = UUID_TEMPLATE.copy()
    # The first two characters are copied through as-is.
    result[0] = base64_str[0]
    result[1] = base64_str[1]
    j = 2
    # Each pair of base64 characters carries 12 bits, i.e. three hex digits.
    for i in range(2, 22, 2):
        lhs = BASE64_VALUES[ord(base64_str[i])]
        rhs = BASE64_VALUES[ord(base64_str[i + 1])]
        result[INDICES[j]] = HEX_CHARS[lhs >> 2]
        j += 1
        result[INDICES[j]] = HEX_CHARS[((lhs & 3) << 2) | (rhs >> 4)]
        j += 1
        result[INDICES[j]] = HEX_CHARS[rhs & 0xF]
        j += 1
    return ''.join(result)

def getresjson():
    # Step 1: read the project config to find the current "special" version.
    url1 = "https://eowgame.jcbgame.com/eow-jp-game/proj.confg.json"
    response1 = requests.get(url1)
    response1.raise_for_status()
    data1 = response1.json()
    special_value = data1.get("Config", {}).get("special")
    if not special_value:
        raise ValueError("Could not read the value of Config -> special")
    print(f"special = {special_value}")

    # Step 2: fetch the bundle config for that version.
    url2 = f"https://eowgame.jcbgame.com/eow-jp-game/bundle_sp/special/config.{special_value}.json"
    response2 = requests.get(url2)
    response2.raise_for_status()
    return response2.json()

data = getresjson()
uuids = data["uuids"]
vers_imp = data["versions"]["import"]
vers_nat = data["versions"]["native"]
paths = data["paths"]

# The version arrays are flat [index, version, index, version, ...] lists;
# turn them into {index: version} lookups.
vimp = {vers_imp[i]: vers_imp[i+1] for i in range(0, len(vers_imp), 2)}
vnat = {vers_nat[i]: vers_nat[i+1] for i in range(0, len(vers_nat), 2)}

BASE = "https://eowgame.jcbgame.com/eow-jp-game/bundle_sp/special"
output_dir = "eowout"
os.makedirs(output_dir, exist_ok=True)

def has_sp_skeleton_data(obj):
    # Recursively search nested lists/dicts for a list whose first element
    # is "sp.SkeletonData"; return that list, or None if there is none.
    if isinstance(obj, list):
        if len(obj) > 0 and obj[0] == "sp.SkeletonData":
            return obj
        for item in obj:
            found = has_sp_skeleton_data(item)
            if found:
                return found
    elif isinstance(obj, dict):
        for value in obj.values():
            found = has_sp_skeleton_data(value)
            if found:
                return found
    return None

for idx, sid in enumerate(uuids):
    # Entries look like "<compressed uuid>" or "<compressed uuid>@<suffix>".
    if '@' in sid:
        uuid_base64, ext = sid.split('@', 1)
    else:
        uuid_base64, ext = sid, None
    uuid = decode_uuid(uuid_base64)
    full_uuid = f"{uuid}@{ext}" if ext else uuid

    # Skip entries that lack either an import or a native version.
    if idx not in vimp or idx not in vnat:
        continue
    imp_ver = vimp[idx]
    nat_ver = vnat[idx]

    json_url = f"{BASE}/import/{uuid[:2]}/{full_uuid}.{imp_ver}.json"
    try:
        response = requests.get(json_url)
        response.raise_for_status()
        json_data = response.json()
    except Exception as e:
        print(f"[!] Download failed: {json_url} ({e})")
        continue

    # Keep only import JSONs that contain an sp.SkeletonData entry.
    if not has_sp_skeleton_data(json_data):
        continue

    path_info = paths.get(str(idx))
    if not path_info:
        print(f"[!] No paths mapping found: idx={idx}")
        continue
    res_path = path_info[0]
    folder = os.path.join(output_dir, *res_path.split("/"))
    os.makedirs(folder, exist_ok=True)
    base_name = os.path.basename(res_path)

    try:
        # The atlas text is read from this position in the import JSON.
        atlas_raw = json_data[5][0][3]
        with open(os.path.join(folder, base_name + ".atlas"), "w", encoding="utf-8") as f:
            f.write(atlas_raw)
        print(f"[+] Wrote atlas: {base_name}.atlas")

        # json_data[1] holds the compressed UUIDs of the textures.
        pngdata = json_data[1]
        for i in range(len(pngdata)):
            pnguuid = decode_uuid(pngdata[i][:22])
            idxpng = uuids.index(pngdata[i][:22])
            nat_verpng = vnat[idxpng]
            pngurl = f"{BASE}/native/{pnguuid[:2]}/{pnguuid}.{nat_verpng}.png"
            try:
                png_data = requests.get(pngurl)
                png_data.raise_for_status()
                # The first page keeps the base name; later pages get _2, _3, ...
                png_tempname = f"{base_name}_{i+1}" if i >= 1 else f"{base_name}"
                png_path = os.path.join(folder, png_tempname + ".png")
                with open(png_path, "wb") as f:
                    f.write(png_data.content)
                print(f"[+] Downloaded png: {png_tempname}.png")
            except Exception as e:
                print(f"[!] Failed to download .png: {pngurl} ({e})")
                continue
    except Exception as e:
        print(f"[!] Failed to write .atlas: {e}")
        continue

    # The skeleton binary is served as .bin and saved as .skel.
    skel_url = f"{BASE}/native/{uuid[:2]}/{uuid}.{nat_ver}.bin"
    try:
        skel_data = requests.get(skel_url)
        skel_data.raise_for_status()
        skel_path = os.path.join(folder, base_name + ".skel")
        with open(skel_path, "wb") as f:
            f.write(skel_data.content)
        print(f"[+] Downloaded skel: {base_name}.skel")
    except Exception as e:
        print(f"[!] Failed to download .skel: {skel_url} ({e})")
        continue

    print(f"[√] Export finished: {res_path}")
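To make the `decode_uuid` step above easier to follow, here is a small standalone sketch of the same bit arithmetic. It assumes, as the script does, that a 22-character ID keeps its first two characters and packs the remaining 30 hex digits into 20 base64 characters. The name `expand_uuid` and the two sample inputs are made up for illustration; they are not real asset IDs.

```python
B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"
B64_VALUE = {c: i for i, c in enumerate(B64)}


def expand_uuid(short):
    """Expand a 22-char compressed ID to 8-4-4-4-12 form (hypothetical helper)."""
    if len(short) != 22:
        return short  # same fallback as decode_uuid: leave other lengths alone
    digits = [short[0], short[1]]  # first two characters are copied as-is
    for i in range(2, 22, 2):
        lhs = B64_VALUE[short[i]]      # 6 bits
        rhs = B64_VALUE[short[i + 1]]  # 6 bits
        # 12 bits in total -> three 4-bit hex digits
        digits.append(format(lhs >> 2, "x"))
        digits.append(format(((lhs & 3) << 2) | (rhs >> 4), "x"))
        digits.append(format(rhs & 0xF, "x"))
    h = "".join(digits)
    return f"{h[:8]}-{h[8:12]}-{h[12:16]}-{h[16:20]}-{h[20:]}"


# Synthetic inputs: 'A' is base64 value 0, '/' is base64 value 63.
print(expand_uuid("00" + "A" * 20))
print(expand_uuid("ff" + "/" * 20))
```

The two synthetic inputs were chosen because their expansions can be checked by hand: every pair of `A` characters yields three `0` digits, and every pair of `/` characters yields three `f` digits.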

For reference, the list of resources retrieved:

D:.
└─spine
    ├─chapter
    │  ├─chapters01
    │  │  └─chapters01
    │  │      └─images
    │  ├─chapters02
    │  │  └─chapters02
    │  │      └─images
    │  ├─chapters03
    │  │  └─chapters03
    │  │      └─images
    │  ├─chapters04
    │  │  └─chapters04
    │  │      └─images
    │  ├─chapters05
    │  │  └─chapters05
    │  │      └─images
    │  ├─chapters06
    │  │  └─chapters06
    │  │      └─images
    │  ├─chapters07
    │  │  └─chapters07
    │  │      └─images
    │  ├─chapters08
    │  │  └─chapters08
    │  │      └─images
    │  ├─chapters09
    │  │  └─chapters09
    │  │      └─images
    │  └─chapters10
    │      └─chapters10
    │          └─images
    └─date
        ├─100101s
        │  └─100101s
        │      └─images
        ├─100102s
        │  └─100102s
        │      └─images
        ├─100103s
        │  └─100103s
        │      └─images
        ├─100201s
        │  └─100201s
        │      └─images
        ├─100202s
        │  └─100202s
        │      └─images
        ├─100203s
        │  └─100203s
        │      └─images
        ├─100301s
        │  └─100301s
        │      └─images
        ├─100302s
        │  └─100302s
        │      └─images
        ├─100303s
        │  └─100303s
        │      └─images
        ├─100401s
        │  └─100401s
        │      └─images
        ├─100402s
        │  └─100402s
        │      └─images
        │          └─man
        ├─100403s
        │  └─100403s
        │      └─images
        │          └─man
        ├─100601s
        │  └─100601s
        │      └─images
        ├─100602s
        │  └─100602s
        │      └─images
        ├─100603s
        │  └─100603s
        │      └─images
        ├─100701s
        │  └─100701s
        │      └─images
        ├─100702s
        │  └─100702s
        │      └─images
        ├─100703s
        │  └─100703s
        │      └─images
        ├─101201s
        │  └─101201s
        │      └─images
        ├─101202s
        │  └─101202s
        │      └─images
        ├─101203s
        │  └─101203s
        │      └─images
        ├─101401s
        │  └─101401s
        │      └─images
        ├─101402s
        │  └─101402s
        │      └─images
        ├─101403s
        │  └─101403s
        │      └─images
        ├─101501s
        │  └─101501s
        │      └─images
        ├─101502s
        │  └─101502s
        │      └─images
        ├─101503s
        │  └─101503s
        │      └─images
        ├─101601s
        │  └─101601s
        │      └─images
        ├─101602s
        │  └─101602s
        │      └─images
        ├─101603s
        │  └─101603s
        │      └─images
        ├─101901s
        │  └─101901s
        │      └─images
        ├─101902s
        │  └─101902s
        │      └─images
        ├─101903s
        │  └─101903s
        │      └─images
        ├─101911s
        │  └─101911s
        │      └─images
        ├─101912s
        │  └─101912s
        │      └─images
        ├─101913s
        │  └─101913s
        │      └─images
        ├─102201s
        │  └─102201s
        │      └─images
        ├─102202s
        │  └─102202s
        │      └─images
        ├─102203s
        │  └─102203s
        │      └─images
        ├─102301s
        │  └─102301s
        │      └─images
        ├─102302s
        │  └─102302s
        │      └─images
        ├─102303s
        │  └─102303s
        │      └─images
        ├─102401s
        │  └─102401s
        │      └─images
        ├─102402s
        │  └─102402s
        │      └─images
        ├─102403s
        │  └─102403s
        │      └─images
        ├─102501s
        │  └─102501s
        │      └─images
        ├─102502s
        │  └─102502s
        │      └─images
        ├─102503s
        │  └─102503s
        │      └─images
        ├─102601s
        │  └─102601s
        │      └─images
        ├─102602s
        │  └─102602s
        │      └─images
        ├─102603s
        │  └─102603s
        │      └─images
        ├─103301s
        │  └─103301s
        │      └─images
        ├─103302s
        │  └─103302s
        │      └─images
        ├─103303s
        │  └─103303s
        │      └─images
        ├─104401s
        │  └─104401s
        │      └─images
        ├─104402s
        │  └─104402s
        │      └─images
        ├─104403s
        │  └─104403s
        │      └─images
        ├─105801s
        │  └─105801s
        │      └─images
        ├─105802s
        │  └─105802s
        │      └─images
        └─105803s
            └─105803s
                └─images

And the one for anima:

import os

import requests

# Standard base64 alphabet, used to map each character back to its 6-bit value.
BASE64_CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"
BASE64_VALUES = [0] * 128
for idx, char in enumerate(BASE64_CHARS):
    BASE64_VALUES[ord(char)] = idx

HEX_CHARS = list('0123456789abcdef')
_t = ['', '', '', '']
# 36 slots: 32 hex digits plus 4 dashes, laid out 8-4-4-4-12.
UUID_TEMPLATE = _t + _t + ['-'] + _t + ['-'] + _t + ['-'] + _t + ['-'] + _t + _t + _t
INDICES = [i for i, x in enumerate(UUID_TEMPLATE) if x != '-']


def decode_uuid(base64_str):
    # Expand a 22-character compressed UUID into the 36-character dashed form.
    # Anything that is not 22 characters long is returned unchanged.
    if len(base64_str) != 22:
        return base64_str
    result = UUID_TEMPLATE.copy()
    # The first two characters are copied through as-is.
    result[0] = base64_str[0]
    result[1] = base64_str[1]
    j = 2
    # Each pair of base64 characters carries 12 bits, i.e. three hex digits.
    for i in range(2, 22, 2):
        lhs = BASE64_VALUES[ord(base64_str[i])]
        rhs = BASE64_VALUES[ord(base64_str[i + 1])]
        result[INDICES[j]] = HEX_CHARS[lhs >> 2]
        j += 1
        result[INDICES[j]] = HEX_CHARS[((lhs & 3) << 2) | (rhs >> 4)]
        j += 1
        result[INDICES[j]] = HEX_CHARS[rhs & 0xF]
        j += 1
    return ''.join(result)

def getresjson():
    # Step 1: read the bundle version list and pick the "anima" entry.
    url1 = "https://eowgame.jcbgame.com/eow-jp-game/bundle/version.json"
    response1 = requests.get(url1)
    response1.raise_for_status()
    data1 = response1.json()
    anima_entry = next((item for item in data1 if item.get("abName") == "anima"), None)
    if not anima_entry:
        raise ValueError("No entry with abName 'anima' found")
    anima_version = anima_entry.get("version")
    if not anima_version:
        raise ValueError("The version field of anima is empty")
    print(f"anima version = {anima_version}")

    # Step 2: fetch the bundle config for that version.
    url2 = f"https://eowgame.jcbgame.com/eow-jp-game/bundle/anima/cc.config.{anima_version}.json"
    response2 = requests.get(url2)
    response2.raise_for_status()
    return response2.json()

data = getresjson()
uuids = data["uuids"]
vers_imp = data["versions"]["import"]
vers_nat = data["versions"]["native"]
paths = data["paths"]

# The version arrays are flat [index, version, index, version, ...] lists;
# turn them into {index: version} lookups.
vimp = {vers_imp[i]: vers_imp[i+1] for i in range(0, len(vers_imp), 2)}
vnat = {vers_nat[i]: vers_nat[i+1] for i in range(0, len(vers_nat), 2)}

BASE = "https://eowgame.jcbgame.com/eow-jp-game/bundle/anima"
output_dir = "eowoutan"
os.makedirs(output_dir, exist_ok=True)

def has_sp_skeleton_data(obj):
    # Recursively search nested lists/dicts for a list whose first element
    # is "sp.SkeletonData"; return that list, or None if there is none.
    if isinstance(obj, list):
        if len(obj) > 0 and obj[0] == "sp.SkeletonData":
            return obj
        for item in obj:
            found = has_sp_skeleton_data(item)
            if found:
                return found
    elif isinstance(obj, dict):
        for value in obj.values():
            found = has_sp_skeleton_data(value)
            if found:
                return found
    return None

for idx, sid in enumerate(uuids):
    # Entries look like "<compressed uuid>" or "<compressed uuid>@<suffix>".
    if '@' in sid:
        uuid_base64, ext = sid.split('@', 1)
    else:
        uuid_base64, ext = sid, None
    uuid = decode_uuid(uuid_base64)
    full_uuid = f"{uuid}@{ext}" if ext else uuid

    # Skip entries that lack either an import or a native version.
    if idx not in vimp or idx not in vnat:
        continue
    imp_ver = vimp[idx]
    nat_ver = vnat[idx]

    json_url = f"{BASE}/import/{uuid[:2]}/{full_uuid}.{imp_ver}.json"
    try:
        response = requests.get(json_url)
        response.raise_for_status()
        json_data = response.json()
    except Exception as e:
        print(f"[!] Download failed: {json_url} ({e})")
        continue

    # Keep only import JSONs that contain an sp.SkeletonData entry.
    if not has_sp_skeleton_data(json_data):
        continue

    path_info = paths.get(str(idx))
    if not path_info:
        print(f"[!] No paths mapping found: idx={idx}")
        continue
    res_path = path_info[0]
    folder = os.path.join(output_dir, *res_path.split("/"))
    os.makedirs(folder, exist_ok=True)
    base_name = os.path.basename(res_path)

    try:
        # The atlas text is read from this position in the import JSON.
        atlas_raw = json_data[5][0][3]
        with open(os.path.join(folder, base_name + ".atlas"), "w", encoding="utf-8") as f:
            f.write(atlas_raw)
        print(f"[+] Wrote atlas: {base_name}.atlas")

        # json_data[1] holds the compressed UUIDs of the textures.
        pngdata = json_data[1]
        for i in range(len(pngdata)):
            pnguuid = decode_uuid(pngdata[i][:22])
            idxpng = uuids.index(pngdata[i][:22])
            nat_verpng = vnat[idxpng]
            pngurl = f"{BASE}/native/{pnguuid[:2]}/{pnguuid}.{nat_verpng}.png"
            try:
                png_data = requests.get(pngurl)
                png_data.raise_for_status()
                # The first page keeps the base name; later pages get _2, _3, ...
                png_tempname = f"{base_name}_{i+1}" if i >= 1 else f"{base_name}"
                png_path = os.path.join(folder, png_tempname + ".png")
                with open(png_path, "wb") as f:
                    f.write(png_data.content)
                print(f"[+] Downloaded png: {png_tempname}.png")
            except Exception as e:
                print(f"[!] Failed to download .png: {pngurl} ({e})")
                continue
    except Exception as e:
        print(f"[!] Failed to write .atlas: {e}")
        continue

    # The skeleton binary is served as .bin and saved as .skel.
    skel_url = f"{BASE}/native/{uuid[:2]}/{uuid}.{nat_ver}.bin"
    try:
        skel_data = requests.get(skel_url)
        skel_data.raise_for_status()
        skel_path = os.path.join(folder, base_name + ".skel")
        with open(skel_path, "wb") as f:
            f.write(skel_data.content)
        print(f"[+] Downloaded skel: {base_name}.skel")
    except Exception as e:
        print(f"[!] Failed to download .skel: {skel_url} ({e})")
        continue

    print(f"[√] Export finished: {res_path}")
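Both scripts build their download URLs the same way: the flat `versions` arrays are paired into lookups, then each URL is assembled as `BASE/<import|native>/<first two characters of the UUID>/<uuid>.<version>.<extension>`. The sketch below isolates just that step. The helper names `pair_versions` and `asset_url`, the base URL, the UUID, and the version strings are all placeholders for illustration, not values from the real config.

```python
def pair_versions(flat):
    """Turn [index, version, index, version, ...] into {index: version}."""
    return {flat[i]: flat[i + 1] for i in range(0, len(flat) - 1, 2)}


def asset_url(base, kind, uuid, version, ext):
    """Build base/<kind>/<first two chars of uuid>/<uuid>.<version>.<ext>."""
    return f"{base}/{kind}/{uuid[:2]}/{uuid}.{version}.{ext}"


# Placeholder data shaped like the config the scripts read (values are made up).
versions = {
    "import": [0, "a1b2c", 3, "d4e5f"],
    "native": [3, "99887"],
}
vimp = pair_versions(versions["import"])
vnat = pair_versions(versions["native"])

base = "https://example.invalid/bundle"
uuid = "0123abcd-0000-0000-0000-000000000000"

# Asset 3 has both an import and a native version, so both URLs can be built.
print(asset_url(base, "import", uuid, vimp[3], "json"))
print(asset_url(base, "native", uuid, vnat[3], "bin"))
```

Asset 0 in this made-up data has an import version but no native one, which is the case the scripts skip with `if idx not in vimp or idx not in vnat`.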

It's fairly slow, and there's not much I can do about that... I don't know how to write multithreaded code with mutexes.
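On the speed problem, one possible approach is a thread pool from the standard library's `concurrent.futures`. This is only a rough sketch and has not been run against the game server. The names `fetch`, `save_one` and `download_all` are hypothetical, and it uses `urllib` instead of `requests` so that it is self-contained. Each task writes to its own file, so under that assumption no mutex should be needed.

```python
import os
from concurrent.futures import ThreadPoolExecutor, as_completed
from urllib.request import urlopen


def fetch(url, timeout=30):
    """Download one URL and return its bytes (raises on HTTP errors)."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read()


def save_one(url, dest, fetch_fn=fetch):
    """Fetch a single URL and write it to dest, creating folders as needed."""
    data = fetch_fn(url)
    os.makedirs(os.path.dirname(dest) or ".", exist_ok=True)
    with open(dest, "wb") as f:
        f.write(data)
    return dest


def download_all(tasks, max_workers=8, fetch_fn=fetch):
    """Run (url, dest) tasks in parallel; return (saved paths, failures)."""
    done, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            pool.submit(save_one, url, dest, fetch_fn): url
            for url, dest in tasks
        }
        # Results are collected in this (main) thread only, so the two
        # lists are never touched by more than one thread at a time.
        for fut in as_completed(futures):
            try:
                done.append(fut.result())
            except Exception as e:
                failed.append((futures[fut], e))
    return done, failed
```

To use it with the scripts above, the main loop would first collect `(url, path)` pairs for the `.png` and `.skel` files instead of downloading them immediately, then pass the whole list to `download_all`. A modest `max_workers` is probably kinder to the server.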